
5 Takeaways From Moderating Our Clicks + Deterministic Views Webinar With Leading Platforms

Spending an hour with leaders from Meta, TikTok, Pinterest, Snap, MNTN, and Vibe was a rare chance to compare notes on how people actually buy in 2026, and how far measurement still needs to catch up.

Here are the five takeaways I walked away with that matter most for performance teams trying to make sense of cross-channel reality.

See the full webinar recording here.

Want to learn more about Clicks + Deterministic Views? Download our guide for a full breakdown of the solution.

Takeaway 1: The hard work is alignment between models and between marketing and finance

The first big theme was alignment on two levels:

1. Measurement stack alignment.

No one on the panel believes in a single, perfect source of truth anymore. The teams that feel most confident are triangulating:

- MTA (multi-touch attribution, including C+DV) for day-to-day trading and intra-channel decisions

- MMM (marketing mix modeling) for longer-horizon allocation across channels, geos, and time

- Incrementality testing for causal clarity when the stakes or spend justify it

In this stack, C+DV becomes the connective layer: verified platform signals (clicks + deterministic views) and real revenue living in the same place, so MMM and experiments can actually inform how you bid and budget tomorrow.

2. Org alignment between finance and marketing.

A recurring tension we heard:

- CMOs and performance leads often know TikTok, Pinterest, Snap, and CTV are working because they see the lift in demand and new customers.

- CFOs are staring at last-click reports that say the opposite.

Deterministic views help close that gap because they:

- Reconcile to your actual ecommerce revenue, not a separate number

- Deduplicate credit across channels, so you’re not double-counting

- Make it clear why organic search and direct might lose credit when you reveal the upstream exposure that actually created demand

If you’re going to shift your attribution model, the most important work is up front: setting expectations that numbers will rebalance, agreeing on the windows that make sense by channel, and being transparent about why the story is changing, not just that it changed.

The brands I’m most bullish on are the ones willing to admit that clicks aren’t the full story, then do the hard work of operationalizing that truth in how they measure, report, and invest.

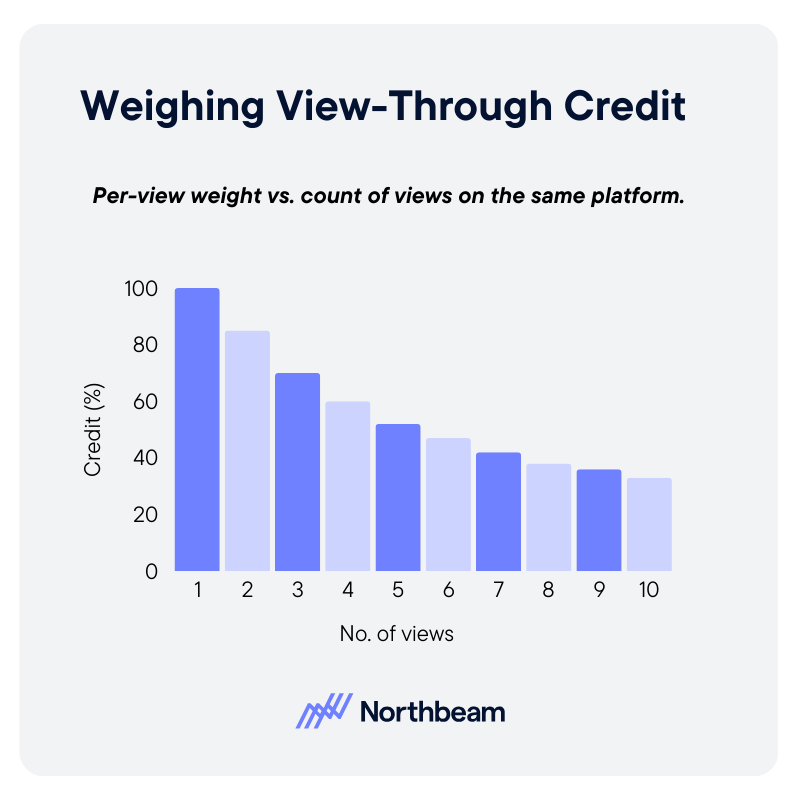

Takeaway 2: “View-through” only helps if the views are deterministic and reconciled to revenue

Most performance teams have a love/hate relationship with view-through metrics.

Everyone knows views matter. But “lift” that sits on top of already-inflated platform numbers is hard to defend in a room with your CFO.

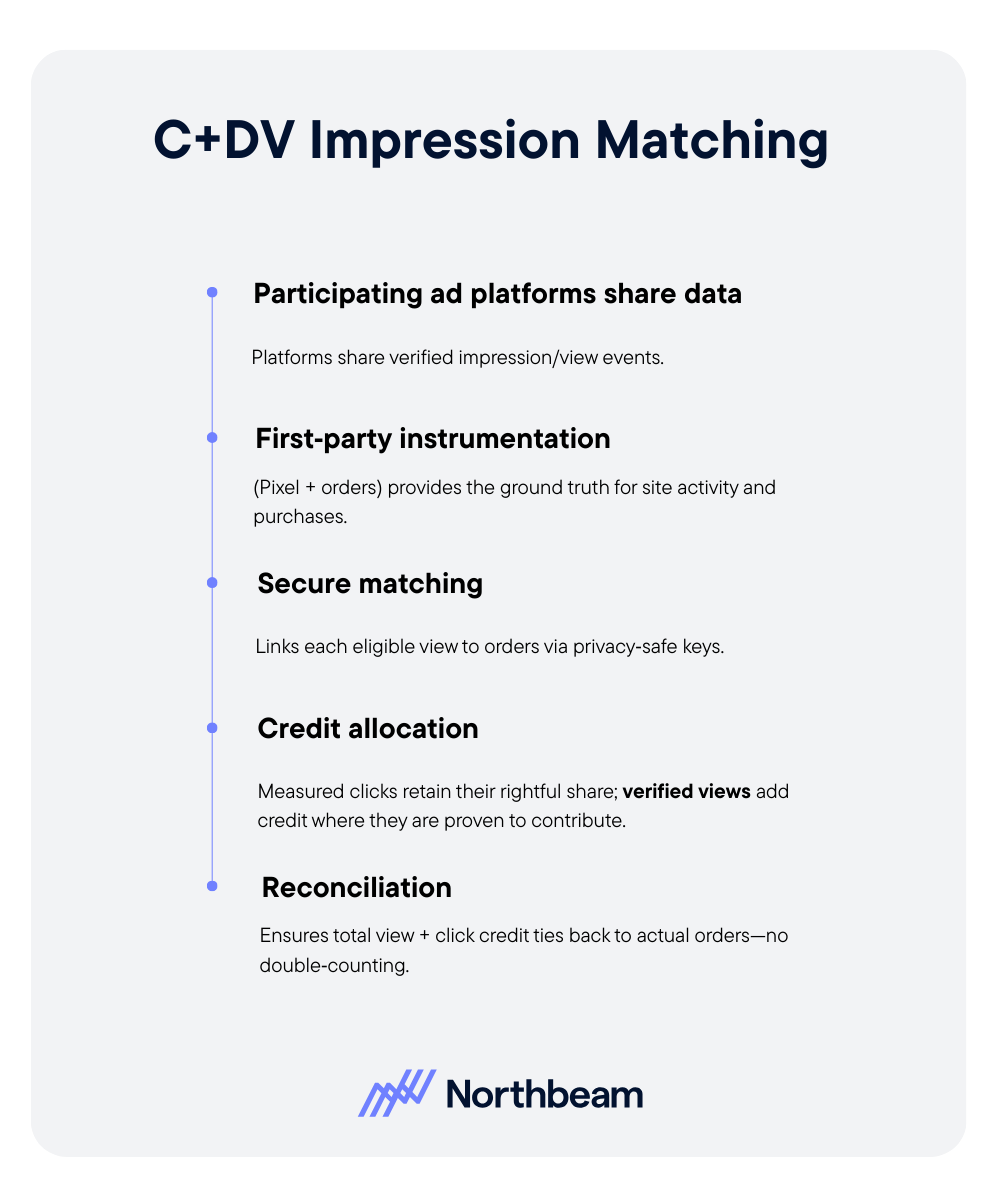

What we discussed on the webinar was a more grounded approach: deterministic views joined to real orders.

In practice, that means:

- Exposure captured in first-party platform data and shared via what I like to think of as “cleanroom” integrations

- Matched back to actual orders via order IDs and hashed emails

- Joined at the user level, so each order has a concrete exposure history
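The matching described above can be sketched in Python. This is a minimal illustration, not how any platform's cleanroom actually works: it assumes exposure records already carry a SHA-256 hash of a trimmed, lowercased email, that orders carry the raw email, and that the field names and the `hash_email` and `join_exposures_to_orders` helpers are hypothetical. It keeps only exposures that happened before each order, so every order ends up with a concrete, time-ordered exposure history.

```python
import hashlib
from collections import defaultdict
from datetime import datetime


def hash_email(email: str) -> str:
    """Normalize an email (trim, lowercase) and return its SHA-256 hex digest."""
    return hashlib.sha256(email.strip().lower().encode("utf-8")).hexdigest()


def join_exposures_to_orders(exposures, orders):
    """Attach each order's prior exposures, matched on hashed email.

    exposures: list of dicts with 'email_hash', 'platform', 'ts' (hypothetical schema)
    orders: list of dicts with 'order_id', 'email', 'ts' (hypothetical schema)
    Returns {order_id: [exposures that happened before the order, oldest first]}.
    """
    by_user = defaultdict(list)
    for exp in exposures:
        by_user[exp["email_hash"]].append(exp)

    history = {}
    for order in orders:
        key = hash_email(order["email"])
        # Only exposures that occurred before the purchase can have influenced it.
        prior = [e for e in by_user.get(key, []) if e["ts"] < order["ts"]]
        history[order["order_id"]] = sorted(prior, key=lambda e: e["ts"])
    return history


exposures = [
    {"email_hash": hash_email("ana@example.com"), "platform": "tiktok", "ts": datetime(2026, 1, 2)},
    {"email_hash": hash_email("ana@example.com"), "platform": "ctv", "ts": datetime(2026, 1, 1)},
    {"email_hash": hash_email("bo@example.com"), "platform": "snap", "ts": datetime(2026, 1, 9)},
]
orders = [
    {"order_id": "A1", "email": " Ana@Example.com ", "ts": datetime(2026, 1, 5)},
    {"order_id": "B1", "email": "bo@example.com", "ts": datetime(2026, 1, 8)},
]
history = join_exposures_to_orders(exposures, orders)
print({oid: [e["platform"] for e in exps] for oid, exps in history.items()})
# {'A1': ['ctv', 'tiktok'], 'B1': []}
```

Order B1 gets no history because its only exposure happened after the purchase.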

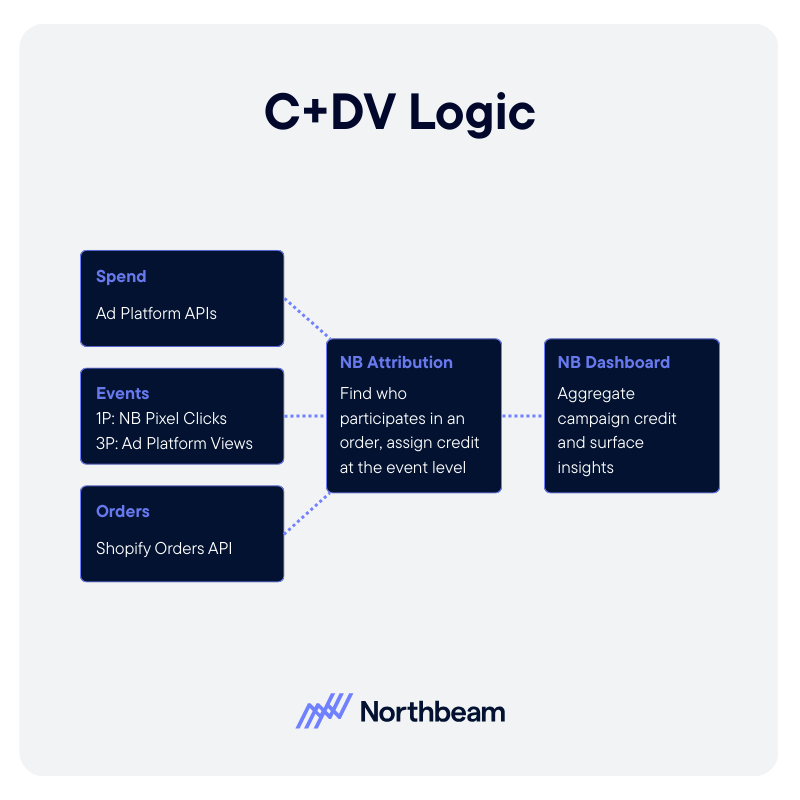

That’s the core idea behind C+DV (Clicks + Deterministic Views):

- Keep the deterministic click backbone. Clicks don’t go away.

- Add only verified views from partner platforms. If we didn’t see it deterministically, we don’t use it.

- Reconcile everything to ecommerce revenue, fully deduplicated. Each order can only be worth 100% of itself, no matter how many touchpoints it had.

Done right, this turns “view-through” from a fuzzy add-on into a second layer on top of clicks that closes the gap between how people behave and what your reporting shows.
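One way to picture the “each order can only be worth 100% of itself” rule is a fractional allocation in which every order's revenue is split across its deterministic touchpoints, so channel totals always reconcile to actual ecommerce revenue. The sketch below assumes an equal split across touchpoints purely for illustration; the webinar did not specify how C+DV weights individual clicks and views, and the `allocate_revenue` helper and data are hypothetical.

```python
from collections import defaultdict


def allocate_revenue(orders):
    """Split each order's revenue across its touchpoints so credit sums to the order value.

    orders: list of dicts with 'revenue' and 'touchpoints' (clicks and verified
    views together). Orders with no touchpoints are credited to 'unattributed'.
    """
    credit = defaultdict(float)
    for order in orders:
        touches = order["touchpoints"]
        if not touches:
            credit["unattributed"] += order["revenue"]
            continue
        share = order["revenue"] / len(touches)  # equal weights: an illustrative assumption
        for touch in touches:
            credit[touch] += share
    return dict(credit)


orders = [
    {"revenue": 120.0, "touchpoints": ["ctv_view", "tiktok_view", "search_click"]},
    {"revenue": 60.0, "touchpoints": ["search_click"]},
    {"revenue": 30.0, "touchpoints": []},
]
credit = allocate_revenue(orders)
print(credit)
# {'ctv_view': 40.0, 'tiktok_view': 40.0, 'search_click': 100.0, 'unattributed': 30.0}
```

Total credit (210.0) equals total revenue, whereas three platforms each claiming the full first order would report 360.0 for that order alone.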

Takeaway 3: Upper-funnel channels, like CTV, are more undervalued than most teams think

Once you start layering deterministic views on top of clicks, a consistent pattern shows up:

- Campaigns that specialize in discovery and demand creation look meaningfully better than last-click tools suggested.

- Channels that were already doing heavy lifting (Meta, TikTok, Snap, Pinterest) gain share once their view-driven impact is visible.

- CTV moves from “brand line item” to measurable performance input. You can’t click your TV, so in a last-click system CTV basically didn’t exist. With deterministic views, you can finally see how often CTV shows up before the search, the retargeting impression, or the final click.

Lag analysis reinforces this. For CTV, one-day windows undercount badly; by the time you look at 7–30 day windows, CTV often shows up as one of the most important touchpoints in the path.
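A lag analysis like this can be approximated by measuring, for orders that had a deterministic CTV exposure, how many days passed between exposure and purchase, then asking what share of those orders each lookback window would capture. The lags below are made-up numbers for illustration only, and `capture_by_window` is a hypothetical helper.

```python
def capture_by_window(lags_days, windows=(1, 7, 30)):
    """Share of exposure-to-order lags (in days) that each lookback window would capture."""
    if not lags_days:
        return {w: 0.0 for w in windows}
    total = len(lags_days)
    return {w: sum(1 for lag in lags_days if lag <= w) / total for w in windows}


ctv_lags = [0.5, 2, 3, 5, 6, 9, 12, 15, 21, 28]  # hypothetical lags, not real data
print(capture_by_window(ctv_lags))
# {1: 0.1, 7: 0.5, 30: 1.0}
```

With these hypothetical lags, a one-day window would credit CTV on only 10% of the orders it preceded.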

The exact lift will vary by brand and channel. The deeper point is structural: your current “single source of truth” is probably biased toward the bottom of the funnel. C+DV is one way of correcting for that by making genuine upper-funnel influence visible in the same framework as search and social.

Takeaway 4: When you see beyond the click, your creative and audience decisions change

A subtler, but important, impact is what happens to creative learning once you can see view-based influence.

In a last-click world, it’s rational to over-index on a small set of “safe”, hyper-clicky creatives and formats. Anything that does more upper-funnel work but fewer immediate clicks looks like a loser.

With deterministic views in the model, you can start to ask better questions:

- Which creative themes reliably show up early in high-value paths, even if they don’t win the last click?

- Which placements and environments (TikTok, Reels, Stories, Pinterest, Snap, CTV) are quietly seeding the audiences that later convert elsewhere?

- How should we evaluate creators and content partners when we can see their upstream influence, not just CTR?

That doesn’t mean clicks stop mattering. It means you finally have permission to invest in true discovery and storytelling, because you can see how those exposures pay off downstream across channels.

Takeaway 5: Clicks still matter, but they systematically miss the moments that create demand

Everyone on the panel saw the same story from a slightly different angle.

- A shopper sees a CTV ad, gets interested, and later searches the brand.

- They scroll past a TikTok video, save an idea on Pinterest, or watch a Story on Snap without clicking.

- Eventually they convert from a search ad or a direct visit days or weeks later.

If your source of truth only counts what got clicked, it’s going to:

- Over-weight the last touch

- Over-fund bottom-funnel

- Underestimate the channels that actually generate net-new demand

That’s true whether you’re Meta, TikTok, Pinterest, Snap, or a CTV partner like MNTN or Vibe. The journeys they see in-platform simply don’t line up with what last-click tools are crediting.

The final, simple takeaway: clicks are necessary, but no longer sufficient, as the backbone of your measurement.

Want to learn more about Clicks + Deterministic Views? Book a demo with us.

SaaS Email Marketing: Strategies to Onboard, Engage, & Retain Your Users

SaaS email marketing plays a critical role in how users activate, engage with, and continue using a product over time.

Unlike traditional email campaigns focused on promotions or announcements, effective SaaS email strategies are deeply connected to product usage and user behavior across the customer lifecycle.

In this guide, we break down how SaaS teams can design email programs that support onboarding, drive feature adoption, and improve retention.

You will learn how to build lifecycle-aware email flows, measure performance using product and revenue metrics, and use attribution to understand how email contributes to activation, upgrades, and long-term growth.

Why Email Marketing is Critical for SaaS

For SaaS businesses, growth does not end at acquisition. A new signup only becomes valuable when that user activates, adopts key features, and continues to use the product over time. This makes the post-signup experience just as important as demand generation, and email remains one of the most effective channels for influencing what happens after the first click.

Done well, email helps users onboard faster, guides them toward deeper usage, and supports retention and renewal, all at a relatively low cost compared to paid media or sales-driven outreach.

SaaS email marketing also looks fundamentally different from traditional email marketing. Instead of periodic newsletters or one-off promotional blasts, SaaS teams rely heavily on automation and behavior-based triggers.

Emails are sent in response to real user actions, or inaction, such as signing up for a free trial, skipping a key setup step, trying a feature for the first time, or going inactive for a set period.

Because of this tight connection to the product, email performance in SaaS must be measured differently as well. Open and click rates provide limited insight on their own. To understand impact, email campaigns need to be directly linked to user actions, product metrics, and value events like activation, feature adoption, upgrades, and retention.

When email is connected to these downstream outcomes, it becomes a measurable growth lever rather than just a communication channel.

Lifecycle Phases & Email Strategy Breakdown

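Effective SaaS email marketing is built around the user lifecycle. By dividing the journey into clear stages, teams can design email strategies that meet users where they are, reinforce the right behaviors at the right time, and move them steadily toward long-term value.

| Lifecycle Phase | Goal | Key Tactics |
|---|---|---|
| Onboarding & Activation | Help new users reach their first “aha” moment; reduce time-to-value and increase activation rate | Welcome emails; setup checklists; feature walkthroughs; milestone emails; structured sequences |
| Engagement & Adoption | Deepen product usage; encourage habitual behavior; unlock premium features and grow value per user | Behavior-based next-step emails; “You haven’t tried X yet” nudges; educational content and tutorials; role- or plan-specific case studies; milestone celebrations |
| Retention, Renewal, & Re-Engagement | Prevent churn; drive renewals and expansions; reclaim inactive or at-risk users | Inactivity-triggered win-back campaigns; renewal reminders; loyalty emails; referral campaigns; usage-based upsell and cross-sell |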

Onboarding & Activation

Goal

- Help new users reach their first “aha” moment quickly

- Reduce time-to-value and increase activation rates

Key tactics

- Welcome email or short welcome sequence

- Setup checklists to guide initial configuration

- Feature walkthroughs focused on core value drivers

- Milestone emails that acknowledge progress and reinforce momentum

- Structured sequences that move from welcome → activation → feature introduction → progress tracking

Email trigger examples

- User signs up or starts a free trial

- User does not complete a key setup step within a defined timeframe

- User completes their first major product action

Metrics to track

- Activation rate

- Time to first value

- Drop-off rate before the first key action
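The three onboarding metrics can be computed from per-user timestamps. This is a minimal sketch that assumes a hypothetical 14-day definition of “activated” and treats the first key product action as the moment of first value; your own definitions, event names, and window will differ.

```python
from datetime import datetime, timedelta
from statistics import median

ACTIVATION_WINDOW = timedelta(days=14)  # hypothetical definition of "activated"


def onboarding_metrics(users):
    """users: list of dicts with a 'signup' timestamp plus 'setup_done' and
    'first_key_action' timestamps (None if the step never happened)."""
    n = len(users)
    reached_setup = [u for u in users if u["setup_done"] is not None]
    reached_key = [u for u in users if u["first_key_action"] is not None]
    activated = [u for u in reached_key
                 if u["first_key_action"] - u["signup"] <= ACTIVATION_WINDOW]
    hours_to_value = [(u["first_key_action"] - u["signup"]).total_seconds() / 3600
                      for u in reached_key]
    return {
        "activation_rate": len(activated) / n,
        "median_hours_to_first_value": median(hours_to_value) if hours_to_value else None,
        # Share of all signups lost before each funnel step.
        "drop_off_before_setup": 1 - len(reached_setup) / n,
        "drop_off_before_first_key_action": 1 - len(reached_key) / n,
    }


t0 = datetime(2026, 3, 1)
users = [
    {"signup": t0, "setup_done": t0 + timedelta(hours=2), "first_key_action": t0 + timedelta(hours=10)},
    {"signup": t0, "setup_done": t0 + timedelta(days=1), "first_key_action": t0 + timedelta(days=20)},
    {"signup": t0, "setup_done": t0 + timedelta(hours=5), "first_key_action": None},
    {"signup": t0, "setup_done": None, "first_key_action": None},
]
metrics = onboarding_metrics(users)
print(metrics)
```

The second user reached the key action but outside the assumed 14-day window, so they count toward time to first value but not toward activation.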

Engagement & Adoption

Goal

- Deepen product usage

- Encourage habitual behavior

- Unlock premium features and grow value per user

Key tactics

- Behavior-based emails suggesting next features or actions

- “You haven’t tried X yet” nudges based on usage gaps

- Educational content, tutorials, and best practices

- Case studies or use cases aligned to the user’s role or plan

- Milestone celebrations to reinforce progress and consistency

Email segmentation examples

- Usage frequency (power users vs light users)

- Plan tier or subscription level

- Features used vs features not yet adopted

Metrics to track

- Active user counts

- Feature adoption rates

- Session recency and frequency

- Free-to-paid conversion rate, if applicable

Retention, Renewal, & Re-Engagement

Goal

- Prevent churn

- Drive renewals and expansions

- Reclaim inactive or at-risk users

Key tactics

- Win-back campaigns triggered by inactivity

- Renewal reminders tied to subscription timelines

- Loyalty or appreciation emails for long-term customers

- Referral or advocate-driven campaigns

- Upsell and cross-sell messages based on demonstrated usage

Metrics to track

- Churn rate

- Renewal rate

- Aggregate lifetime value

- Reactivation rate of dormant accounts
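Churn, renewal, and reactivation rates are simple ratios once their denominators are fixed. The sketch below uses common but assumed definitions for a single reporting period: churn counts accounts active at the start that are inactive at the end, renewal rate looks only at accounts whose renewal fell due, and reactivation looks at accounts that were dormant at the start. The `Account` fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Account:
    active_at_start: bool  # active at the start of the reporting period
    active_at_end: bool    # active at the end of the reporting period
    renewal_due: bool = False
    renewed: bool = False


def safe_rate(numerator, denominator):
    """Ratio that returns 0.0 instead of failing on an empty denominator."""
    return numerator / denominator if denominator else 0.0


def retention_metrics(accounts):
    active_start = [a for a in accounts if a.active_at_start]
    dormant_start = [a for a in accounts if not a.active_at_start]
    due = [a for a in accounts if a.renewal_due]
    return {
        "churn_rate": safe_rate(sum(not a.active_at_end for a in active_start), len(active_start)),
        "renewal_rate": safe_rate(sum(a.renewed for a in due), len(due)),
        "reactivation_rate": safe_rate(sum(a.active_at_end for a in dormant_start), len(dormant_start)),
    }


accounts = [
    Account(True, True, renewal_due=True, renewed=True),
    Account(True, False, renewal_due=True, renewed=False),
    Account(True, True),
    Account(True, True),
    Account(False, True),   # dormant account that came back
    Account(False, False),  # dormant account that stayed dormant
]
print(retention_metrics(accounts))
```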

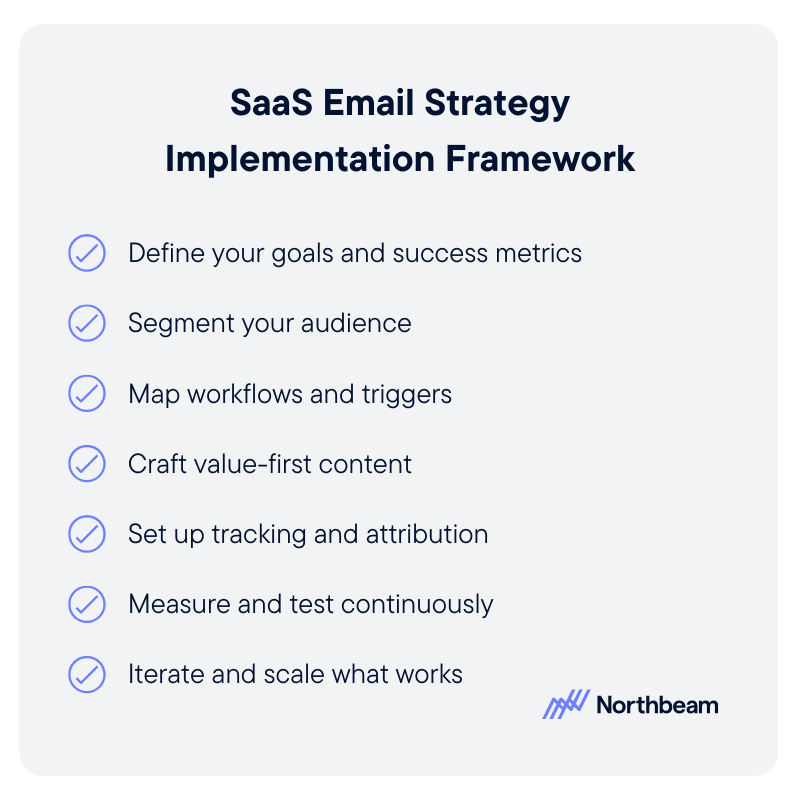

Email Strategy & Implementation Framework

This framework outlines the core steps teams should follow to design, measure, and scale lifecycle-driven email programs.

1. Define Your Goals and Success Metrics

Start by clearly defining what success looks like for each lifecycle stage. Set specific targets for activation rates, reductions in churn, increases in feature adoption, or improvements in conversion from free to paid.

These goals should be directly tied to business outcomes, not just email engagement metrics, so performance can be evaluated in terms of real impact.

2. Segment Your Audience

Segment users based on meaningful differences in their journey and behavior. Common segmentation dimensions include lifecycle stage, product usage patterns, plan tier, and features used.

Strong segmentation ensures that emails remain relevant and timely, which is critical for driving behavior change rather than fatigue.
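To make these segmentation dimensions concrete, the sketch below groups users by lifecycle stage, usage frequency (power vs. light), plan tier, and the next feature worth promoting. The session threshold, stage names, and feature priority list are hypothetical placeholders, not recommended values.

```python
from collections import defaultdict
from dataclasses import dataclass, field

POWER_USER_SESSIONS = 12  # hypothetical threshold: sessions in the last 30 days


@dataclass
class User:
    user_id: str
    lifecycle_stage: str  # e.g. "onboarding", "engaged", "at_risk"
    sessions_30d: int
    plan: str             # plan tier, e.g. "free" or "pro"
    features_used: set[str] = field(default_factory=set)


def next_feature(user, feature_priority):
    """First feature in priority order the user has not adopted yet, or None."""
    return next((f for f in feature_priority if f not in user.features_used), None)


def build_segments(users, feature_priority):
    """Group user IDs by (lifecycle stage, usage level, plan tier, next feature to promote)."""
    segments = defaultdict(list)
    for u in users:
        usage = "power" if u.sessions_30d >= POWER_USER_SESSIONS else "light"
        key = (u.lifecycle_stage, usage, u.plan, next_feature(u, feature_priority))
        segments[key].append(u.user_id)
    return dict(segments)


priority = ["reports", "integrations", "automations"]
users = [
    User("u1", "engaged", 20, "pro", {"reports", "integrations"}),
    User("u2", "engaged", 3, "free", {"reports"}),
    User("u3", "engaged", 15, "pro", {"reports", "integrations"}),
]
for key, ids in build_segments(users, priority).items():
    print(key, ids)
```

Each segment key maps naturally to one email variant, for example an “automations” tip for engaged power users on the pro plan.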

3. Map Workflows and Triggers

For each lifecycle stage, define the email sequences, triggers, and conditional logic that determine when messages are sent. Identify primary triggers, such as signups or inactivity, as well as fallback flows for users who do not complete key actions.

Clear workflow mapping helps ensure users receive the right message at the right time.
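Trigger logic is often easiest to review when written as a declarative rule list. In this minimal sketch, each rule pairs an email name with a condition on the user's state, and a fallback reminder fires when setup is still incomplete after a grace period. The 48-hour grace period, 14-day inactivity limit, email names, and user fields are all assumptions for illustration.

```python
from datetime import datetime, timedelta

SETUP_GRACE = timedelta(hours=48)      # hypothetical fallback delay
INACTIVITY_LIMIT = timedelta(days=14)  # hypothetical inactivity threshold

# Each rule: (email name, condition on the user's state at time `now`).
RULES = [
    ("welcome", lambda u, now: True),
    ("setup_reminder", lambda u, now: u["setup_done"] is None and now - u["signup"] >= SETUP_GRACE),
    ("first_milestone", lambda u, now: u["first_key_action"] is not None),
    ("re_engagement", lambda u, now: now - u["last_active"] >= INACTIVITY_LIMIT),
]


def emails_due(user, now):
    """Return the emails whose conditions hold now and that were not already sent."""
    return [name for name, rule in RULES if name not in user["sent"] and rule(user, now)]


now = datetime(2026, 3, 10)
user = {
    "signup": datetime(2026, 3, 7),
    "setup_done": None,  # skipped the key setup step
    "first_key_action": None,
    "last_active": datetime(2026, 3, 7),
    "sent": {"welcome"},
}
print(emails_due(user, now))
# ['setup_reminder']
```

Keeping rules as data makes it easy to audit which condition sends which email. A production system would also handle re-sending (for example, a second re-engagement email after renewed inactivity), which this sketch skips by sending each email at most once.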

4. Craft Value-First Content

Tailor email content to the user’s current needs and level of maturity. Onboarding users may need guidance and reassurance, while power users may benefit from advanced tips or new features.

Focus on delivering value and clarity rather than promotional messaging, especially in early and mid-lifecycle stages.

5. Set Up Tracking and Attribution

Connect email engagement data to downstream product events such as setup completion, feature usage, upgrades, or renewals. This linkage allows teams to attribute changes in activation, retention, and revenue directly to specific email flows and campaigns.
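A simple way to link email to downstream events is to credit each product event to the flow of the user's most recent email click inside a lookback window. This last-touch rule and the 7-day window are assumptions chosen for illustration, not a recommendation; the data shapes and the `attribute_events_to_flows` helper are hypothetical.

```python
from datetime import datetime, timedelta

ATTRIBUTION_WINDOW = timedelta(days=7)  # hypothetical lookback


def attribute_events_to_flows(email_clicks, product_events):
    """Credit each product event to the flow of the user's most recent email click
    within the lookback window, if any.

    email_clicks: list of (user_id, flow, ts)
    product_events: list of (user_id, event_name, ts)
    Returns {(flow, event_name): count}.
    """
    counts = {}
    for user_id, event_name, ev_ts in product_events:
        candidates = [(ts, flow) for uid, flow, ts in email_clicks
                      if uid == user_id and timedelta(0) <= ev_ts - ts <= ATTRIBUTION_WINDOW]
        if not candidates:
            continue  # no email click in the window: leave the event unattributed
        _, flow = max(candidates)  # most recent click wins
        counts[(flow, event_name)] = counts.get((flow, event_name), 0) + 1
    return counts


clicks = [
    ("u1", "onboarding", datetime(2026, 3, 1)),
    ("u1", "feature_tips", datetime(2026, 3, 4)),
    ("u2", "onboarding", datetime(2026, 2, 1)),
]
events = [
    ("u1", "upgrade", datetime(2026, 3, 6)),
    ("u2", "upgrade", datetime(2026, 3, 6)),  # click is 33 days old: outside the window
    ("u1", "setup_complete", datetime(2026, 3, 2)),
]
print(attribute_events_to_flows(clicks, events))
```

The nested scan is fine for a sketch; at scale you would index clicks by user first.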

6. Measure and Test Continuously

Monitor performance across both email-level metrics and product outcomes. Track open and click rates alongside conversion events and drop-off points. Use A/B testing to refine subject lines, content, and workflows based on measurable improvements.
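When an A/B test targets a product outcome, such as trial-to-paid conversion, a two-proportion z-test is one standard way to check whether the difference between variants is likely to be noise. The sketch uses only the Python standard library; the counts are hypothetical.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates between variants A and B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


# Hypothetical test: control onboarding flow vs. a new variant, 2,000 trial users each.
z, p = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=250, n_b=2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up numbers (10% vs. 12.5% conversion), z is about 2.5 and the two-sided p-value is about 0.012.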

7. Iterate and Scale What Works

Use performance insights to refine segmentation, introduce dynamic content, and expand behavior-based triggers. As programs mature, teams can scale successful flows and explore AI-driven personalization to further optimize relevance and impact.

Attribution & Performance Metrics Specific to SaaS Email Marketing

To understand whether email is truly driving growth in SaaS, teams need to measure performance through the lens of product behavior and revenue outcomes, not just email engagement.

Focus on Business Outcomes, Not Vanity Metrics

Open and click rates offer limited insight on their own. In SaaS, email success should be measured by its impact on activation, feature adoption, upgrades, retention, and churn reduction.

These metrics reflect whether emails are influencing meaningful user behavior and long-term value.

Account for Multi-Touch Attribution Complexity

Email typically works alongside other channels such as in-product messaging, sales outreach, and paid acquisition. Attribution models should account for this by tracking how email contributes across the user journey over time, even when it is not the final touchpoint before conversion or renewal.

Use Funnel-Based Measurement

A funnel-based approach makes attribution more actionable. By tracking how email interactions lead to product actions and ultimately to upgrades or renewals, teams can identify where email is accelerating progress and where users are stalling.

Build Lifecycle-Aligned Dashboards

Dashboards should reflect the SaaS lifecycle rather than isolated email metrics.

Useful views include cohorts grouped by email flow entry, activation rates by cohort, time to first key action, 30-, 60-, and 90-day retention, and upgrade rate differences between engaged and non-engaged users.
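A retention-by-cohort view can be computed directly from flow-entry dates and activity dates. In this sketch, a user counts as retained at N days if they have any activity on or after their N-day mark, and users who have not yet reached a mark are left out of that mark's denominator. Both rules, and the field names, are assumptions; adapt them to your own retention definition.

```python
from collections import defaultdict
from datetime import date, timedelta


def retention_by_cohort(users, as_of, days=(30, 60, 90)):
    """Share of each flow-entry cohort retained N days after entering the flow.

    users: list of dicts with 'flow', 'entered' (date) and 'active_dates' (set of dates).
    """
    # flow -> N -> [retained, eligible]
    tallies = defaultdict(lambda: {d: [0, 0] for d in days})
    for u in users:
        for d in days:
            mark = u["entered"] + timedelta(days=d)
            if mark > as_of:
                continue  # too early to judge this user at N days
            tally = tallies[u["flow"]][d]
            tally[1] += 1
            if any(day >= mark for day in u["active_dates"]):
                tally[0] += 1
    return {flow: {d: (r / e if e else None) for d, (r, e) in by_day.items()}
            for flow, by_day in tallies.items()}


users = [
    {"flow": "onboarding_v2", "entered": date(2026, 1, 1), "active_dates": {date(2026, 1, 5), date(2026, 4, 15)}},
    {"flow": "onboarding_v2", "entered": date(2026, 1, 1), "active_dates": {date(2026, 1, 10)}},
    {"flow": "onboarding_v2", "entered": date(2026, 6, 1), "active_dates": {date(2026, 6, 2)}},
]
print(retention_by_cohort(users, as_of=date(2026, 6, 30)))
```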

Compare Outcomes to Prove Impact

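Comparative analysis helps demonstrate email’s true value. For example, comparing users who completed onboarding email sequences with those who did not can reveal retention or activation uplift associated with email programs. To attribute that uplift directly to email, compare against a randomly held-out group that did not receive the sequence, since users who finish a sequence may simply be more motivated to begin with.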

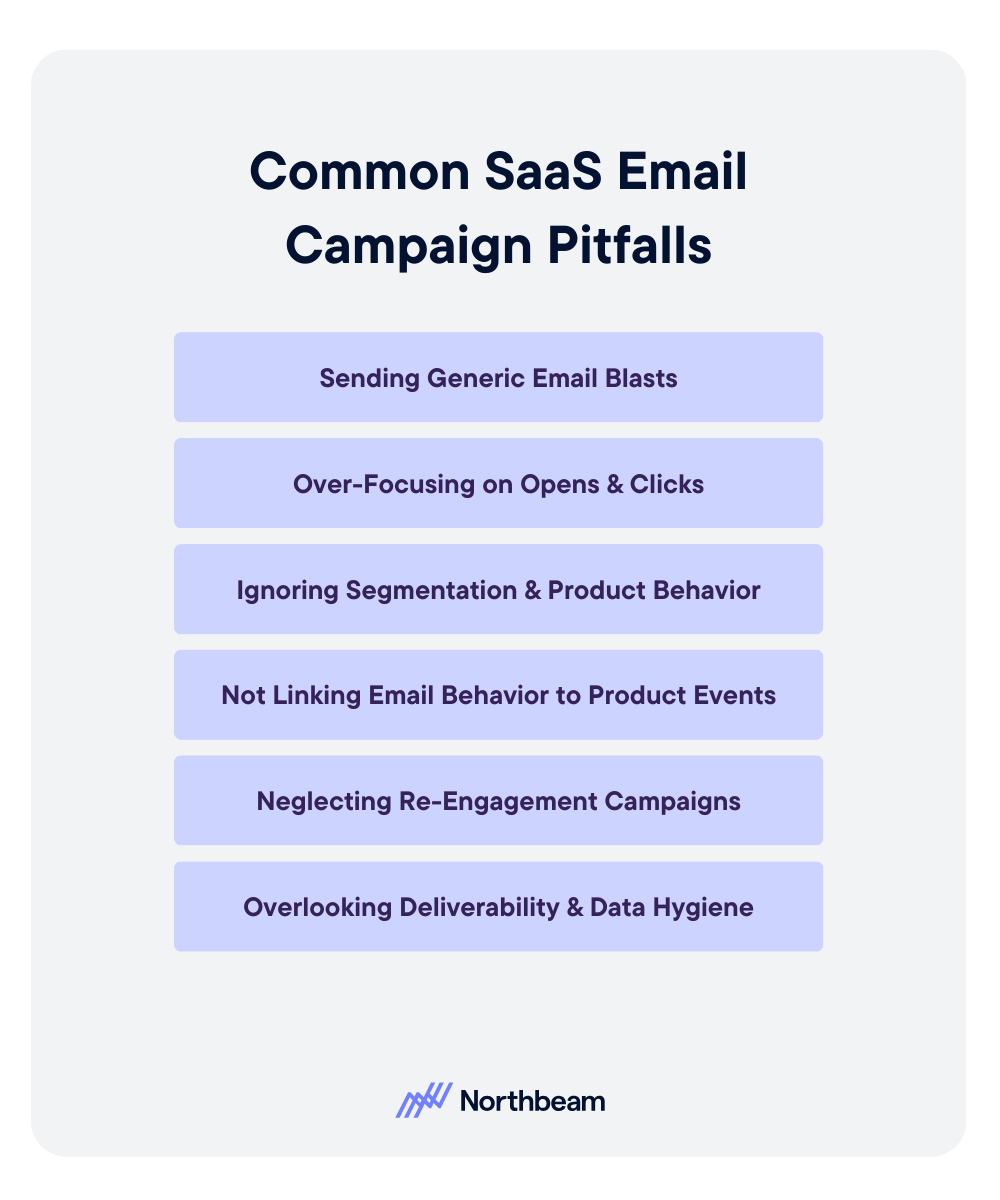

Common Pitfalls & What to Avoid

Even well-intentioned SaaS email programs can underperform if they fall into common traps that limit relevance, measurability, and long-term impact.

Sending Generic Email Blasts

Relying on one-size-fits-all campaigns instead of lifecycle-aware, behavior-based flows leads to lower engagement. Tie each campaign to a specific goal, such as reducing churn.

Over-Focusing on Opens and Clicks

Measuring success only through opens and clicks obscures whether emails are driving activation, adoption, or retention.

Ignoring Segmentation and Product Behavior

Failing to tailor emails based on lifecycle stage, usage patterns, or plan tier results in irrelevant messaging and missed opportunities.

Not Linking Email Behavior to Product Events

When email activity is disconnected from product data, teams cannot accurately measure how email campaigns affect activation, upgrades, or retention.

Neglecting Re-engagement Campaigns

Without proactive win-back and inactivity-triggered emails, users can disengage and churn without clear warning signals.

Overlooking Deliverability and Data Hygiene

Poor list management, outdated data, or deliverability issues can suppress open rates and undermine even well-designed email programs.

A Practical Example of Lifecycle-Driven SaaS Email

Consider a SaaS company that set out to improve long-term retention by rethinking how it onboarded free trial users.

Rather than relying on a single welcome message, the team designed a structured five-email onboarding sequence aligned to early product behavior:

- Day 1: Users received a welcome email

- Day 2: Users received a setup guidance email

- Day 4: Users received an email introducing a core feature tied to the product’s primary value

- Day 7: Users who had not yet tried the feature received a behavioral checkpoint email highlighting what they were missing

- Day 14: Engaged users were presented with a targeted upgrade offer

By tracking product usage alongside email engagement, the team found that users who reached the Day 4 feature email converted to paid plans at a 40% higher rate within 30 days compared to those who did not.

Beyond onboarding, the company applied similar logic to retention. Users who went inactive for 14 days triggered a re-engagement email offering a short webinar or feature tip, which ultimately reduced churn by 12%.

Measurement also informed iteration. Analysis showed that users from one acquisition source consistently activated at lower rates. In response, the team created a separate onboarding path tailored to that cohort’s needs and expectations.

Using product analytics combined with email tagging, they demonstrated that users who completed the full onboarding flow achieved 60% higher retention at 90 days versus the baseline, clearly linking lifecycle email strategy to measurable business impact.

Turning Email Into a SaaS Growth Engine

Email remains one of the most powerful levers SaaS teams have to influence user behavior after signup. When aligned to the lifecycle and informed by product data, email can accelerate activation, deepen engagement, and protect long-term revenue through retention and renewal.

By defining clear goals, segmenting thoughtfully, and continuously testing and iterating based on real user behavior, SaaS teams can build email programs that scale alongside the product and deliver lasting impact across the customer lifecycle.

Ensuring Data Reliability: How to Trust Your Marketing Analytics

Marketing teams rely on analytics to make high-stakes decisions about budget allocation, campaign optimization, and attribution. But when marketing data is inaccurate, incomplete, or inconsistent, those decisions quickly become guesswork.

Data reliability is what turns dashboards into decision tools. It ensures that performance metrics reflect reality, attribution models assign credit correctly, and stakeholders can trust the insights guiding strategy.

In this article, we break down how to ensure data reliability in marketing analytics, where it commonly fails, and how teams can build systems and processes that support trusted, actionable insights at scale.

Why Data Reliability Matters in Marketing Analytics

Marketing teams make decisions every day based on what their data appears to be telling them. All of those decisions depend on one foundational assumption: that the data itself can be trusted.

Data reliability refers to the extent to which marketing data is accurate, complete, consistent, timely, and usable as a dependable basis for decision-making.

Reliable data reflects reality. It captures the full picture across channels, updates quickly enough to guide action, and uses consistent definitions so teams are not comparing mismatched numbers.

When those conditions are met, analytics becomes a decision engine rather than a reporting exercise.

But without reliable data, even sophisticated tools and models produce misleading outputs. This lack of trust is widespread. In one industry survey, more than a third of CMOs reported that they do not fully trust their marketing data.

When data reliability fails, the consequences compound quickly:

- Budgets get misdirected toward channels that appear to perform well but do not actually drive incremental value

- Attribution models assign credit incorrectly, skewing future decisions

- Different teams report conflicting numbers, leading to debates about whose data is “right” rather than what action to take

- Over time, stakeholders lose confidence not just in the data, but in marketing’s ability to justify its impact

Reliable data is not a nice-to-have. It is the foundation of credible marketing analytics.

Key Dimensions of Reliable Marketing Data

Reliable marketing data is the result of several foundational qualities working together. When one dimension breaks down, trust in the entire dataset weakens.

Understanding these dimensions helps teams diagnose where reliability issues originate and what needs to be fixed to restore confidence.

Accuracy

Accuracy answers a basic question: does the data reflect correct values? This includes spend, impressions, clicks, conversions, and revenue.

Inaccurate data often stems from tracking misfires, platform discrepancies, or flawed integrations. Even small inaccuracies can cascade into large decision errors when multiplied across campaigns and budgets.

Completeness

Completeness measures whether all relevant data points are captured. Missing channels, dropped conversion events, or partial funnel visibility create blind spots that skew performance analysis.

Data can appear clean while still being incomplete, which makes this one of the most deceptive reliability issues.

Consistency and Format

Consistency ensures that metrics mean the same thing everywhere they appear. A “conversion” should not be defined differently across platforms, dashboards, or time periods.

Inconsistent definitions make comparisons unreliable and erode trust between teams reviewing the same performance from different views.

Timeliness and Freshness

Timely data arrives quickly enough to inform decisions. Stale or delayed data forces teams to react to yesterday’s performance instead of today’s reality. Freshness is especially critical for pacing, optimization, and in-flight campaign adjustments.

Usability and Accessibility

Data must be easy to access, interpret, and act on. If insights require manual extraction, complex explanations, or technical translation, trust breaks down and adoption suffers.

Validity

Validity confirms that transformations, joins, and logic rules correctly represent real-world behavior. Even accurate and complete source data can become unreliable if the underlying data logic is flawed.

Common Sources of Unreliable Marketing Data

Understanding where marketing data typically breaks down makes it easier to prevent errors before they undermine reporting, attribution, and decision-making.

Inconsistent Definitions Across Platforms

One of the most common issues is inconsistent metric definitions.

As mentioned in the last section, a “conversion” might represent a purchase in one system, a form fill in another, and a modeled event elsewhere.

When teams compare or combine these numbers without alignment, the resulting analysis is fundamentally flawed.
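Before combining numbers, it helps to map every source's raw event name onto one agreed definition and to surface anything unmapped rather than silently dropping it. The source names, event names, and mapping below are hypothetical examples.

```python
from collections import Counter

# Hypothetical mapping: (source, raw event name) -> canonical definition.
EVENT_MAP = {
    ("ads_platform_a", "Purchase"): "purchase",
    ("analytics_tool", "purchase"): "purchase",
    ("crm", "form_fill"): "lead",
    # Modeled events get their own bucket so they are never summed with observed purchases.
    ("ads_platform_b", "modeled_conversion"): "modeled",
}


def canonical_counts(events):
    """Count (source, event name) pairs by canonical definition; report unmapped pairs."""
    counts, unmapped = Counter(), Counter()
    for source, name in events:
        key = EVENT_MAP.get((source, name))
        if key is None:
            unmapped[(source, name)] += 1
        else:
            counts[key] += 1
    return counts, unmapped


events = [
    ("ads_platform_a", "Purchase"),
    ("analytics_tool", "purchase"),
    ("crm", "form_fill"),
    ("ads_platform_b", "modeled_conversion"),
    ("ads_platform_b", "AddToCart"),
]
counts, unmapped = canonical_counts(events)
print(counts)
print(unmapped)
```

Aligning definitions is only the first step: the same purchase reported by two sources still needs deduplicating before totals are summed.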

Disparate Systems and Siloed Data

Marketing data often lives across multiple platforms, including ad networks, analytics tools, and CRM systems. Without proper integration, these systems produce mismatched views of performance.

Spend, clicks, and revenue fail to line up, leading to confusion and manual reconciliation that erodes trust.

Manual Data Handling

Copying and pasting data between spreadsheets, tools, or decks introduces human error and delays. Manual workflows also make it difficult to audit changes or understand how numbers were calculated, reducing transparency and repeatability.

Outdated or Stale Data

Making decisions based on stale or outdated data is especially damaging for campaign optimization, pacing, and budget reallocation, where timing directly impacts results.

Weak Data Governance

Without clear ownership, standardized processes, and validation checks, reliability becomes reactive rather than systematic.

Poor governance allows small inconsistencies to persist, making it harder to identify the source of errors and restore confidence once trust is lost.

Building a Framework for Reliable Marketing Analytics

Reliable marketing data requires intentional systems, clear ownership, and repeatable processes that make trust the default rather than the exception.

The following steps help teams move from ad hoc fixes to a durable reliability framework:

1. Define a Single Source of Truth

Establish a shared, governed view of core marketing metrics. This does not always mean one tool, but it does mean one agreed-upon pipeline, set of definitions, and reporting layer that everyone uses when making decisions.

2. Establish Strong Data Governance

Clear governance ensures consistency over time. Define metric definitions, naming conventions, ownership, access controls, and audit processes.

When responsibilities are explicit, issues are easier to trace and resolve.

3. Implement Data Validation and Cleaning

Regular validation prevents small issues from becoming systemic failures. Use automated checks to flag missing values, duplicates, unexpected spikes, or format mismatches. Schedule audits to confirm data remains accurate as systems evolve.
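Automated checks for missing values, duplicates, and format mismatches can be as simple as a row-level validator run on every load. This sketch assumes a hypothetical daily campaign table keyed by date and campaign ID; the required fields and rules are illustrative, not exhaustive.

```python
from datetime import datetime

REQUIRED = ("date", "campaign_id", "spend", "conversions")  # hypothetical schema


def validate_rows(rows):
    """Return human-readable issues found in daily campaign rows."""
    issues = []
    seen = set()
    for i, row in enumerate(rows):
        missing = [f for f in REQUIRED if row.get(f) in (None, "")]
        if missing:
            issues.append(f"row {i}: missing {', '.join(missing)}")
            continue
        try:
            datetime.strptime(row["date"], "%Y-%m-%d")  # expected ISO date format
        except ValueError:
            issues.append(f"row {i}: bad date format {row['date']!r}")
        key = (row["date"], row["campaign_id"])
        if key in seen:
            issues.append(f"row {i}: duplicate {key}")
        seen.add(key)
        if row["spend"] < 0:
            issues.append(f"row {i}: negative spend")
    return issues


rows = [
    {"date": "2026-03-01", "campaign_id": "c1", "spend": 100.0, "conversions": 4},
    {"date": "2026-03-01", "campaign_id": "c1", "spend": 100.0, "conversions": 4},
    {"date": "03/02/2026", "campaign_id": "c2", "spend": 50.0, "conversions": 1},
    {"date": "2026-03-02", "campaign_id": "c3", "spend": None, "conversions": 2},
]
for issue in validate_rows(rows):
    print(issue)
```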

4. Automate and Standardize Pipelines

Automation reduces human error and improves repeatability. ETL or ELT pipelines help integrate data across platforms while enforcing consistent transformations, making outputs easier to trust and scale.

5. Set Up Monitoring and Alerting

Monitoring turns reliability into an ongoing process. Alerts for anomalies, failed data feeds, or sudden metric shifts allow teams to respond quickly before flawed data influences decisions.
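A basic monitor can combine a freshness check (has the feed loaded recently?) with an anomaly check (is the latest value far outside the recent baseline?). The sketch below flags values more than three standard deviations from the recent mean and feeds older than 26 hours; both thresholds and the `check_metric` function are assumptions to tune for your own data.

```python
from datetime import datetime, timedelta
from statistics import mean, stdev


def check_metric(history, latest, last_loaded, now,
                 z_threshold=3.0, max_age=timedelta(hours=26)):
    """Return alert messages for a stale feed or an anomalous latest value.

    history: recent daily values used as the baseline (at least two points).
    latest: the newest value to test against that baseline.
    last_loaded: when the feed last loaded successfully.
    """
    alerts = []
    age = now - last_loaded
    if age > max_age:
        alerts.append(f"stale feed: last successful load {age} ago")
    mu, sigma = mean(history), stdev(history)
    if sigma > 0 and abs(latest - mu) / sigma > z_threshold:
        alerts.append(f"anomaly: latest={latest}, baseline mean={mu:.1f}, sd={sigma:.1f}")
    return alerts


now = datetime(2026, 3, 10, 9, 0)
daily_conversions = [100, 104, 98, 101, 97, 103, 99]  # hypothetical baseline
print(check_metric(daily_conversions, 160, now - timedelta(hours=30), now))
print(check_metric(daily_conversions, 101, now - timedelta(hours=2), now))
```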

6. Provide Access and Transparency

Dashboards should not just show numbers. They should help stakeholders understand where data comes from, how it is calculated, and when it was last updated.

7. Build a Culture of Data Trust

Technical fixes alone are not enough. Invest in training, involve marketing teams in data design, and reinforce data-informed decision-making across the organization.

Reporting & Attribution: Ensuring Trust in Your Marketing Metrics

Reporting and attribution outputs directly influence budget decisions, performance narratives, and executive confidence. If the underlying data is unreliable, even the most advanced attribution models will produce misleading results, giving teams a false sense of precision.

Here’s how to build trust in marketing data for attribution and reporting:

Confirm Reliable Inputs

Attribution models, whether last-click, multi-touch, or marketing mix modeling, are only as strong as the data they consume. Flawed channel data, missing conversions, or inconsistent definitions invalidate attribution outputs, regardless of the sophistication of the model itself.

Cleanly Link Spend to Outcomes

Reliable reporting depends on clean, validated connections between ad spend, impressions, clicks, conversions, and revenue.

When these elements are not accurately joined, channels receive too much or too little credit, leading to distorted performance assessments and misallocated budget.

Run Checks Before Attribution

Before running attribution analyses, teams should validate the underlying data. This includes comparing platform-reported metrics, checking funnel logic, and testing for anomalies or sudden shifts that signal data issues rather than real performance changes.
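One pre-attribution check is to reconcile each channel's platform-reported conversions against orders your own backend matched to that channel, and to flag large relative gaps for investigation before running any model. The 20% tolerance, channel names, and counts here are hypothetical; some gap is expected, since platforms count conversions under their own rules.

```python
def reconciliation_report(platform_reported, backend_orders, tolerance=0.2):
    """Flag channels whose platform-reported conversions diverge from backend-matched
    orders by more than `tolerance` (relative). Both args: {channel: count}."""
    flagged = {}
    for channel, reported in platform_reported.items():
        actual = backend_orders.get(channel, 0)
        if actual == 0:
            if reported > 0:
                flagged[channel] = float("inf")  # platform claims conversions we never saw
            continue
        gap = (reported - actual) / actual
        if abs(gap) > tolerance:
            flagged[channel] = round(gap, 3)
    return flagged


platform = {"social": 540, "search": 410, "ctv": 95}
backend = {"social": 400, "search": 400, "ctv": 90}
print(reconciliation_report(platform, backend))
# {'social': 0.35}
```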

Surface Data Quality in Reports

Trust increases when stakeholders understand data quality. Including reliability indicators such as data completeness, refresh latency, known gaps, or confidence levels helps leaders interpret results appropriately and reduces friction when numbers change.

Validate Insights with Incrementality

Attribution outputs should be tested, not taken at face value. Incrementality metrics, hold-out tests, and controlled experiments help confirm whether attributed channels are truly driving growth, providing a reality check that strengthens confidence in reported results.

Challenges & Trade-Offs

Putting data reliability best practices in place delivers long-term value, but it also introduces real trade-offs that teams need to plan for.

Understanding these challenges upfront helps set realistic expectations and prevents reliability efforts from stalling or losing stakeholder support:

Slower Reporting in the Short Term

Investments in data cleaning, validation, and governance can initially slow down reporting cycles. Teams may need to pause automation or delay insights while issues are corrected, which can feel uncomfortable in fast-moving environments.

Risk of Over-Optimizing for Perfection

Chasing perfectly clean data can lead to analysis paralysis. Not every decision requires flawless inputs. Teams must define what “good enough” looks like for different use cases and avoid blocking action unnecessarily.

Constant Platform and Privacy Changes

Marketing ecosystems evolve quickly. Privacy regulations, identity changes, and platform updates can break existing pipelines, making data reliability an ongoing effort rather than a one-time fix.

Stakeholder Impatience

Leaders and channel owners may push for immediate answers, even when data quality is uncertain. Without alignment, this pressure can undermine reliability initiatives and reintroduce manual shortcuts.

Balancing Speed with Trust

The core tension is between agility and rigor. Effective teams design systems that surface reliable insights quickly, while clearly signaling when data should be treated with caution.

How Clean Data Changes the Story

At first glance, a brand’s paid media performance looked strong. Cost per click was low, reported ROAS was high, and attribution dashboards suggested several channels were outperforming expectations.

Based on those numbers, the team began preparing to scale spend.

But after a deeper review, they discovered multiple data reliability issues. Duplicate conversions were inflating results, and delayed data refreshes were masking recent performance drops.

Once those integration errors were corrected and pipelines were cleaned, reported ROAS fell by 15%. The numbers looked worse, but they were finally accurate.

Rather than reverting to the old view, the team invested in reliability. They implemented clearer data governance, standardized pipelines, and daily validation alerts to catch issues early.

Dashboards were updated to include a simple data health score that surfaced completeness, latency, and error rates. Failed refreshes were annotated, and metric definitions were versioned so changes were transparent.

With trustworthy data in place, decision-making improved.

The team paused channels that had been benefiting from misattribution, reallocated budget toward channels that were genuinely driving incremental value, and scaled spend with confidence.

Growth stabilized, and stakeholder trust in marketing analytics was restored.

Turning Data Reliability Into Action

Building reliable marketing data starts with a focused set of actions that make reliability visible, repeatable, and accountable across teams.

- Run a data reliability audit: Map your core marketing and attribution metrics end to end. Identify where data originates, how it flows through systems, and where it is transformed. Score each metric on accuracy, completeness, freshness, and consistency to surface risk areas.

- Prioritize the biggest failure points: Focus on the top two or three issues causing the most damage, such as delayed refreshes, inconsistent definitions, or manual handoffs. Assign clear owners and timelines so fixes actually get implemented.

- Add a data health dashboard: Make reliability visible. Track indicators like data freshness, error rates, missing fields, and completeness alongside performance metrics. This creates shared awareness and prevents silent failures.

- Include reliability signals in stakeholder reporting: Help leadership interpret results by surfacing confidence indicators directly in reports. When data has known gaps or delays, flag them clearly so decisions are made with appropriate context.

- Establish a regular review cadence: Data reliability is not a one-time project. Revisit definitions, pipelines, and checks monthly or quarterly to adapt to new platforms, campaigns, and measurement needs.
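The audit and prioritization steps can be captured in a small scorecard: score each metric on the four dimensions, then surface the lowest-scoring metrics along with their weakest dimension. The 1-to-5 scale, equal weighting, and example scores are assumptions for illustration.

```python
from dataclasses import dataclass

DIMENSIONS = ("accuracy", "completeness", "freshness", "consistency")


@dataclass
class MetricAudit:
    name: str
    scores: dict[str, int]  # dimension -> 1 (poor) to 5 (reliable); scale is an assumption

    def overall(self):
        """Equal-weighted average across the four dimensions."""
        return sum(self.scores[d] for d in DIMENSIONS) / len(DIMENSIONS)

    def weakest(self):
        """Lowest-scoring dimension (first one wins on ties)."""
        return min(DIMENSIONS, key=lambda d: self.scores[d])


def top_failure_points(audits, n=3):
    """Return the n lowest-scoring metrics with their weakest dimension."""
    ranked = sorted(audits, key=lambda a: a.overall())
    return [(a.name, a.overall(), a.weakest()) for a in ranked[:n]]


audits = [
    MetricAudit("ROAS", {"accuracy": 2, "completeness": 3, "freshness": 2, "consistency": 3}),
    MetricAudit("Spend", {"accuracy": 5, "completeness": 5, "freshness": 4, "consistency": 5}),
    MetricAudit("Conversions", {"accuracy": 3, "completeness": 2, "freshness": 4, "consistency": 1}),
    MetricAudit("CPC", {"accuracy": 4, "completeness": 4, "freshness": 3, "consistency": 4}),
]
for name, score, weakest in top_failure_points(audits, n=2):
    print(f"{name}: {score:.2f} (weakest: {weakest})")
```

Pairing each flagged metric with its weakest dimension points directly at the kind of fix it needs, such as definitions for consistency or refresh schedules for freshness.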

By operationalizing data reliability, teams can move faster with confidence and ensure performance insights truly reflect reality.
